Contents

sbt Reference Manual

sbt is a build tool for Scala, Java, and more. It requires Java 1.8 or later.

Install

See Installing sbt for the setup instructions.

Getting Started

To get started, please read the Getting Started Guide. You will save yourself a lot of time if you have the right understanding of the big picture up-front. All documentation may be found via the table of contents included on the left of every page.

See also Frequently asked question.

See How can I get help? for where you can get help about sbt. For discussing sbt development, use Discussions. To stay up to date about the news related to sbt, follow us @scala_sbt.

Features of sbt

- Little or no configuration required for simple projects

- Scala-based build definition that can use the full flexibility of Scala code

- Accurate incremental recompilation using information extracted from the compiler

- Library management support using Coursier

- Continuous compilation and testing with triggered execution

- Supports mixed Scala/Java projects

- Supports testing with ScalaCheck, specs, and ScalaTest. JUnit is supported by a plugin.

- Starts the Scala REPL with project classes and dependencies on the classpath

- Modularization supported with sub-projects

- External project support (list a git repository as a dependency!)

- Parallel task execution, including parallel test execution

Also

This documentation can be forked on GitHub. Feel free to make corrections and add documentation.

Documentation for 0.13.x has been archived here. This documentation applies to sbt 1.10.10.

See also the API Documentation, and the index of names and types.

Getting Started with sbt

sbt uses a small number of concepts to support flexible and powerful build definitions. There are not that many concepts, but sbt is not exactly like other build systems and there are details you will stumble on if you haven’t read the documentation.

The Getting Started Guide covers the concepts you need to know to create and maintain an sbt build definition.

It is highly recommended to read the Getting Started Guide!

If you are in a huge hurry, the most important conceptual background can be found in build definition, scopes, and task graph. But we don’t promise that it’s a good idea to skip the other pages in the guide.

It’s best to read in order, as later pages in the Getting Started Guide build on concepts introduced earlier.

Thanks for trying out sbt and have fun!

Installing sbt

To create an sbt project, you’ll need to take these steps:

- Install JDK (We recommend Eclipse Adoptium Temurin JDK 8, 11, or 17).

- Install sbt.

- Setup a simple hello world project

- Move on to running to learn how to run sbt.

- Then move on to .sbt build definition to learn more about build definitions.

Ultimately, the installation of sbt boils down to a launcher JAR and a shell script, but depending on your platform, we provide several ways to make the process less tedious. Head over to the installation steps for macOS, Windows, or Linux.

Tips and Notes

If you have any trouble running sbt, see Command line reference on JVM options.

Installing sbt on macOS

Install sbt with cs setup

Follow Install page, and install Scala using Coursier. This should install the latest stable version of sbt.

Install JDK

Follow the link to install JDK 8 or 11, or use SDKMAN!.

SDKMAN!

$ sdk install java $(sdk list java | grep -o "\b8\.[0-9]*\.[0-9]*\-tem" | head -1)

$ sdk install sbt

Installing from a universal package

Download ZIP or TGZ package, and expand it.

Installing from a third-party package

Note: Third-party packages may not provide the latest version. Please make sure to report any issues with these packages to the relevant maintainers.

Homebrew

$ brew install sbt

Installing sbt on Windows

Install sbt with cs setup

Follow Install page, and install Scala using Coursier. This should install the latest stable version of sbt.

Install JDK

Follow the link to install JDK 8 or 11.

Installing from a universal package

Download ZIP or TGZ package and expand it.

Windows installer

Download msi installer and install it.

Installing from a third-party package

Note: Third-party packages may not provide the latest version. Please make sure to report any issues with these packages to the relevant maintainers.

Scoop

$ scoop install sbt

Chocolatey

$ choco install sbt

Installing sbt on Linux

Install sbt with cs setup

Follow Install page, and install Scala using Coursier. This should install the latest stable version of sbt.

Installing from SDKMAN

To install both JDK and sbt, consider using SDKMAN.

$ sdk install java $(sdk list java | grep -o "\b8\.[0-9]*\.[0-9]*\-tem" | head -1)

$ sdk install sbt

Using Coursier or SDKMAN has two advantages.

- They will install the official packaging by Eclipse Adoptium, as opposed to the “mystery meat OpenJDK builds“.

- They will install

tgzpackaging of sbt that contains all JAR files. (DEB and RPM packages do not to save bandwidth)

Install JDK

You must first install a JDK. We recommend Eclipse Adoptium Temurin JDK 8, JDK 11, or JDK 17.

The details around the package names differ from one distribution to another. For example, Ubuntu xenial (16.04LTS) has openjdk-8-jdk. Redhat family calls it java-1.8.0-openjdk-devel.

Installing from a universal package

Download ZIP or TGZ package and expand it.

Ubuntu and other Debian-based distributions

DEB package is officially supported by sbt.

Ubuntu and other Debian-based distributions use the DEB format, but usually you don’t install your software from a local DEB file. Instead they come with package managers both for the command line (e.g. apt-get, aptitude) or with a graphical user interface (e.g. Synaptic).

Run the following from the terminal to install sbt (You’ll need superuser privileges to do so, hence the sudo).

sudo apt-get update

sudo apt-get install apt-transport-https curl gnupg -yqq

echo "deb https://repo.scala-sbt.org/scalasbt/debian all main" | sudo tee /etc/apt/sources.list.d/sbt.list

echo "deb https://repo.scala-sbt.org/scalasbt/debian /" | sudo tee /etc/apt/sources.list.d/sbt_old.list

curl -sL "https://keyserver.ubuntu.com/pks/lookup?op=get&search=0x2EE0EA64E40A89B84B2DF73499E82A75642AC823" | sudo -H gpg --no-default-keyring --keyring gnupg-ring:/etc/apt/trusted.gpg.d/scalasbt-release.gpg --import

sudo chmod 644 /etc/apt/trusted.gpg.d/scalasbt-release.gpg

sudo apt-get update

sudo apt-get install sbt

Package managers will check a number of configured repositories for packages to offer for installation. You just have to add the repository to the places your package manager will check.

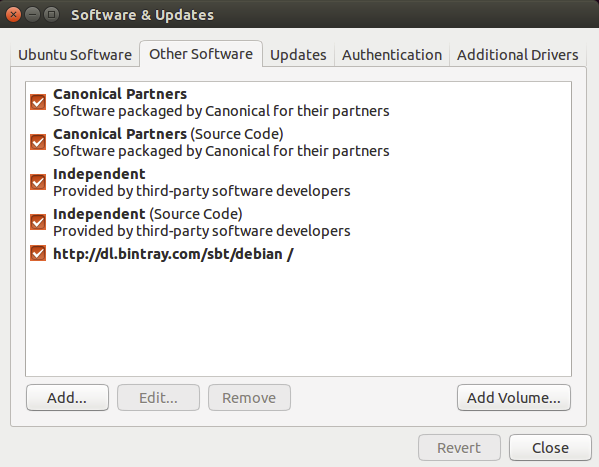

Once sbt is installed, you’ll be able to manage the package in aptitude or Synaptic after you updated their package cache. You should also be able to see the added repository at the bottom of the list in System Settings -> Software & Updates -> Other Software:

Note: There have been reports about SSL error using Ubuntu: Server access Error: java.lang.RuntimeException: Unexpected error: java.security.InvalidAlgorithmParameterException: the trustAnchors parameter must be non-empty url=https://repo1.maven.org/maven2/org/scala-sbt/sbt/1.1.0/sbt-1.1.0.pom, which apparently stems from OpenJDK 9 using PKCS12 format for /etc/ssl/certs/java/cacerts cert-bug. According to https://stackoverflow.com/a/50103533/3827 it is fixed in Ubuntu Cosmic (18.10), but Ubuntu Bionic LTS (18.04) is still waiting for a release. See the answer for a workaround.

Note: sudo apt-key adv --keyserver hkps://keyserver.ubuntu.com:443 --recv 2EE0EA64E40A89B84B2DF73499E82A75642AC823 may not work on Ubuntu Bionic LTS (18.04) since it’s using a buggy GnuPG, so we are advising to use web API to download the public key in the above.

Red Hat Enterprise Linux and other RPM-based distributions

RPM package is officially supported by sbt.

Red Hat Enterprise Linux and other RPM-based distributions use the RPM format.

Run the following from the terminal to install sbt (You’ll need superuser privileges to do so, hence the sudo).

# remove old Bintray repo file

sudo rm -f /etc/yum.repos.d/bintray-rpm.repo

curl -L https://www.scala-sbt.org/sbt-rpm.repo > sbt-rpm.repo

sudo mv sbt-rpm.repo /etc/yum.repos.d/

sudo yum install sbt

On Fedora (31 and above), use sbt-rpm.repo:

# remove old Bintray repo file

sudo rm -f /etc/yum.repos.d/bintray-rpm.repo

curl -L https://www.scala-sbt.org/sbt-rpm.repo > sbt-rpm.repo

sudo mv sbt-rpm.repo /etc/yum.repos.d/

sudo dnf install sbt

Note: Please report any issues with these to the sbt project.

Gentoo

The official tree contains ebuilds for sbt. To install the latest available version do:

emerge dev-java/sbt

sbt by example

This page assumes you’ve installed sbt 1.

Let’s start with examples rather than explaining how sbt works or why.

Create a minimum sbt build

$ mkdir foo-build

$ cd foo-build

$ touch build.sbt

Start sbt shell

$ sbt

[info] Updated file /tmp/foo-build/project/build.properties: set sbt.version to 1.9.3

[info] welcome to sbt 1.9.3 (Eclipse Adoptium Java 17.0.8)

[info] Loading project definition from /tmp/foo-build/project

[info] loading settings for project foo-build from build.sbt ...

[info] Set current project to foo-build (in build file:/tmp/foo-build/)

[info] sbt server started at local:///Users/eed3si9n/.sbt/1.0/server/abc4fb6c89985a00fd95/sock

[info] started sbt server

sbt:foo-build>

Exit sbt shell

To leave sbt shell, type exit or use Ctrl+D (Unix) or Ctrl+Z (Windows).

sbt:foo-build> exit

Compile a project

As a convention, we will use the sbt:...> or > prompt to mean that we’re in the sbt interactive shell.

$ sbt

sbt:foo-build> compile

Recompile on code change

Prefixing the compile command (or any other command) with ~ causes the command to be automatically

re-executed whenever one of the source files within the project is modified. For example:

sbt:foo-build> ~compile

[success] Total time: 0 s, completed 28 Jul 2023, 13:32:35

[info] 1. Monitoring source files for foo-build/compile...

[info] Press <enter> to interrupt or '?' for more options.

Create a source file

Leave the previous command running. From a different shell or in your file manager create in the foo-build

directory the following nested directories: src/main/scala/example. Then, create Hello.scala

in the example directory using your favorite editor as follows:

package example

object Hello {

def main(args: Array[String]): Unit = {

println("Hello")

}

}

This new file should be picked up by the running command:

[info] Build triggered by /tmp/foo-build/src/main/scala/example/Hello.scala. Running 'compile'.

[info] compiling 1 Scala source to /tmp/foo-build/target/scala-2.12/classes ...

[success] Total time: 0 s, completed 28 Jul 2023, 13:38:55

[info] 2. Monitoring source files for foo-build/compile...

[info] Press <enter> to interrupt or '?' for more options.

Press Enter to exit ~compile.

Run a previous command

From sbt shell, press up-arrow twice to find the compile command that you

executed at the beginning.

sbt:foo-build> compile

Getting help

Use the help command to get basic help about the available commands.

sbt:foo-build> help

<command> (; <command>)* Runs the provided semicolon-separated commands.

about Displays basic information about sbt and the build.

tasks Lists the tasks defined for the current project.

settings Lists the settings defined for the current project.

reload (Re)loads the current project or changes to plugins project or returns from it.

new Creates a new sbt build.

new Creates a new sbt build.

projects Lists the names of available projects or temporarily adds/removes extra builds to the session.

....

Display the description of a specific task:

sbt:foo-build> help run

Runs a main class, passing along arguments provided on the command line.

Run your app

sbt:foo-build> run

[info] running example.Hello

Hello

[success] Total time: 0 s, completed 28 Jul 2023, 13:40:31

Set ThisBuild / scalaVersion from sbt shell

sbt:foo-build> set ThisBuild / scalaVersion := "2.13.12"

[info] Defining ThisBuild / scalaVersion

[info] The new value will be used by Compile / bspBuildTarget, Compile / dependencyTreeCrossProjectId and 50 others.

[info] Run `last` for details.

[info] Reapplying settings...

[info] set current project to foo-build (in build file:/tmp/foo-build/)

Check the scalaVersion setting:

sbt:foo-build> scalaVersion

[info] 2.13.12

Save the session to build.sbt

We can save the ad-hoc settings using session save.

sbt:foo-build> session save

[info] Reapplying settings...

[info] set current project to foo-build (in build file:/tmp/foo-build/)

[warn] build source files have changed

[warn] modified files:

[warn] /tmp/foo-build/build.sbt

[warn] Apply these changes by running `reload`.

[warn] Automatically reload the build when source changes are detected by setting `Global / onChangedBuildSource := ReloadOnSourceChanges`.

[warn] Disable this warning by setting `Global / onChangedBuildSource := IgnoreSourceChanges`.

build.sbt file should now contain:

ThisBuild / scalaVersion := "2.13.12"

Name your project

Using an editor, change build.sbt as follows:

ThisBuild / scalaVersion := "2.13.12"

ThisBuild / organization := "com.example"

lazy val hello = (project in file("."))

.settings(

name := "Hello"

)

Reload the build

Use the reload command to reload the build. The command causes the

build.sbt file to be re-read, and its settings applied.

sbt:foo-build> reload

[info] welcome to sbt 1.9.3 (Eclipse Adoptium Java 17.0.8)

[info] loading project definition from /tmp/foo-build/project

[info] loading settings for project hello from build.sbt ...

[info] set current project to Hello (in build file:/tmp/foo-build/)

sbt:Hello>

Note that the prompt has now changed to sbt:Hello>.

Add toolkit-test to libraryDependencies

Using an editor, change build.sbt as follows:

ThisBuild / scalaVersion := "2.13.12"

ThisBuild / organization := "com.example"

lazy val hello = project

.in(file("."))

.settings(

name := "Hello",

libraryDependencies += "org.scala-lang" %% "toolkit-test" % "0.1.7" % Test

)

Use the reload command to reflect the change in build.sbt.

sbt:Hello> reload

Run tests

sbt:Hello> test

Run incremental tests continuously

sbt:Hello> ~testQuick

Write a test

Leaving the previous command running, create a file named src/test/scala/example/HelloSuite.scala

using an editor:

class HelloSuite extends munit.FunSuite {

test("Hello should start with H") {

assert("hello".startsWith("H"))

}

}

~testQuick should pick up the change:

[info] 2. Monitoring source files for hello/testQuick...

[info] Press <enter> to interrupt or '?' for more options.

[info] Build triggered by /tmp/foo-build/src/test/scala/example/HelloSuite.scala. Running 'testQuick'.

[info] compiling 1 Scala source to /tmp/foo-build/target/scala-2.13/test-classes ...

HelloSuite:

==> X HelloSuite.Hello should start with H 0.004s munit.FailException: /tmp/foo-build/src/test/scala/example/HelloSuite.scala:4 assertion failed

3: test("Hello should start with H") {

4: assert("hello".startsWith("H"))

5: }

at munit.FunSuite.assert(FunSuite.scala:11)

at HelloSuite.$anonfun$new$1(HelloSuite.scala:4)

[error] Failed: Total 1, Failed 1, Errors 0, Passed 0

[error] Failed tests:

[error] HelloSuite

[error] (Test / testQuick) sbt.TestsFailedException: Tests unsuccessful

Make the test pass

Using an editor, change src/test/scala/example/HelloSuite.scala to:

class HelloSuite extends munit.FunSuite {

test("Hello should start with H") {

assert("Hello".startsWith("H"))

}

}

Confirm that the test passes, then press Enter to exit the continuous test.

Add a library dependency

Using an editor, change build.sbt as follows:

ThisBuild / scalaVersion := "2.13.12"

ThisBuild / organization := "com.example"

lazy val hello = project

.in(file("."))

.settings(

name := "Hello",

libraryDependencies ++= Seq(

"org.scala-lang" %% "toolkit" % "0.1.7",

"org.scala-lang" %% "toolkit-test" % "0.1.7" % Test

)

)

Use the reload command to reflect the change in build.sbt.

Use Scala REPL

We can find out the current weather in New York.

sbt:Hello> console

[info] Starting scala interpreter...

Welcome to Scala 2.13.12 (OpenJDK 64-Bit Server VM, Java 17).

Type in expressions for evaluation. Or try :help.

scala> :paste

// Entering paste mode (ctrl-D to finish)

import sttp.client4.quick._

import sttp.client4.Response

val newYorkLatitude: Double = 40.7143

val newYorkLongitude: Double = -74.006

val response: Response[String] = quickRequest

.get(

uri"https://api.open-meteo.com/v1/forecast?latitude=$newYorkLatitude&longitude=$newYorkLongitude¤t_weather=true"

)

.send()

println(ujson.read(response.body).render(indent = 2))

// press Ctrl+D

// Exiting paste mode, now interpreting.

{

"latitude": 40.710335,

"longitude": -73.99307,

"generationtime_ms": 0.36704540252685547,

"utc_offset_seconds": 0,

"timezone": "GMT",

"timezone_abbreviation": "GMT",

"elevation": 51,

"current_weather": {

"temperature": 21.3,

"windspeed": 16.7,

"winddirection": 205,

"weathercode": 3,

"is_day": 1,

"time": "2023-08-04T10:00"

}

}

import sttp.client4.quick._

import sttp.client4.Response

val newYorkLatitude: Double = 40.7143

val newYorkLongitude: Double = -74.006

val response: sttp.client4.Response[String] = Response({"latitude":40.710335,"longitude":-73.99307,"generationtime_ms":0.36704540252685547,"utc_offset_seconds":0,"timezone":"GMT","timezone_abbreviation":"GMT","elevation":51.0,"current_weather":{"temperature":21.3,"windspeed":16.7,"winddirection":205.0,"weathercode":3,"is_day":1,"time":"2023-08-04T10:00"}},200,,List(:status: 200, content-encoding: deflate, content-type: application/json; charset=utf-8, date: Fri, 04 Aug 2023 10:09:11 GMT),List(),RequestMetadata(GET,https://api.open-meteo.com/v1/forecast?latitude=40.7143&longitude...

scala> :q // to quit

Make a subproject

Change build.sbt as follows:

ThisBuild / scalaVersion := "2.13.12"

ThisBuild / organization := "com.example"

lazy val hello = project

.in(file("."))

.settings(

name := "Hello",

libraryDependencies ++= Seq(

"org.scala-lang" %% "toolkit" % "0.1.7",

"org.scala-lang" %% "toolkit-test" % "0.1.7" % Test

)

)

lazy val helloCore = project

.in(file("core"))

.settings(

name := "Hello Core"

)

Use the reload command to reflect the change in build.sbt.

List all subprojects

sbt:Hello> projects

[info] In file:/tmp/foo-build/

[info] * hello

[info] helloCore

Compile the subproject

sbt:Hello> helloCore/compile

Add toolkit-test to the subproject

Change build.sbt as follows:

ThisBuild / scalaVersion := "2.13.12"

ThisBuild / organization := "com.example"

val toolkitTest = "org.scala-lang" %% "toolkit-test" % "0.1.7"

lazy val hello = project

.in(file("."))

.settings(

name := "Hello",

libraryDependencies ++= Seq(

"org.scala-lang" %% "toolkit" % "0.1.7",

toolkitTest % Test

)

)

lazy val helloCore = project

.in(file("core"))

.settings(

name := "Hello Core",

libraryDependencies += toolkitTest % Test

)

Broadcast commands

Set aggregate so that the command sent to hello is broadcast to helloCore too:

ThisBuild / scalaVersion := "2.13.12"

ThisBuild / organization := "com.example"

val toolkitTest = "org.scala-lang" %% "toolkit-test" % "0.1.7"

lazy val hello = project

.in(file("."))

.aggregate(helloCore)

.settings(

name := "Hello",

libraryDependencies ++= Seq(

"org.scala-lang" %% "toolkit" % "0.1.7",

toolkitTest % Test

)

)

lazy val helloCore = project

.in(file("core"))

.settings(

name := "Hello Core",

libraryDependencies += toolkitTest % Test

)

After reload, ~testQuick now runs on both subprojects:

sbt:Hello> ~testQuick

Press Enter to exit the continuous test.

Make hello depend on helloCore

Use .dependsOn(...) to add a dependency on other subprojects. Also let’s move the toolkit dependency to helloCore.

ThisBuild / scalaVersion := "2.13.12"

ThisBuild / organization := "com.example"

val toolkitTest = "org.scala-lang" %% "toolkit-test" % "0.1.7"

lazy val hello = project

.in(file("."))

.aggregate(helloCore)

.dependsOn(helloCore)

.settings(

name := "Hello",

libraryDependencies += toolkitTest % Test

)

lazy val helloCore = project

.in(file("core"))

.settings(

name := "Hello Core",

libraryDependencies += "org.scala-lang" %% "toolkit" % "0.1.7",

libraryDependencies += toolkitTest % Test

)

Parse JSON using uJson

Let’s use uJson from the toolkit in helloCore.

ThisBuild / scalaVersion := "2.13.12"

ThisBuild / organization := "com.example"

val toolkitTest = "org.scala-lang" %% "toolkit-test" % "0.1.7"

lazy val hello = project

.in(file("."))

.aggregate(helloCore)

.dependsOn(helloCore)

.settings(

name := "Hello",

libraryDependencies += toolkitTest % Test

)

lazy val helloCore = project

.in(file("core"))

.settings(

name := "Hello Core",

libraryDependencies += "org.scala-lang" %% "toolkit" % "0.1.7",

libraryDependencies += toolkitTest % Test

)

After reload, add core/src/main/scala/example/core/Weather.scala:

package example.core

import sttp.client4.quick._

import sttp.client4.Response

object Weather {

def temp() = {

val response: Response[String] = quickRequest

.get(

uri"https://api.open-meteo.com/v1/forecast?latitude=40.7143&longitude=-74.006¤t_weather=true"

)

.send()

val json = ujson.read(response.body)

json.obj("current_weather")("temperature").num

}

}

Next, change src/main/scala/example/Hello.scala as follows:

package example

import example.core.Weather

object Hello {

def main(args: Array[String]): Unit = {

val temp = Weather.temp()

println(s"Hello! The current temperature in New York is $temp C.")

}

}

Let’s run the app to see if it worked:

sbt:Hello> run

[info] compiling 1 Scala source to /tmp/foo-build/core/target/scala-2.13/classes ...

[info] compiling 1 Scala source to /tmp/foo-build/target/scala-2.13/classes ...

[info] running example.Hello

Hello! The current temperature in New York is 22.7 C.

Add sbt-native-packager plugin

Using an editor, create project/plugins.sbt:

addSbtPlugin("com.github.sbt" % "sbt-native-packager" % "1.9.4")

Next change build.sbt as follows to add JavaAppPackaging:

ThisBuild / scalaVersion := "2.13.12"

ThisBuild / organization := "com.example"

val toolkitTest = "org.scala-lang" %% "toolkit-test" % "0.1.7"

lazy val hello = project

.in(file("."))

.aggregate(helloCore)

.dependsOn(helloCore)

.enablePlugins(JavaAppPackaging)

.settings(

name := "Hello",

libraryDependencies += toolkitTest % Test,

maintainer := "A Scala Dev!"

)

lazy val helloCore = project

.in(file("core"))

.settings(

name := "Hello Core",

libraryDependencies += "org.scala-lang" %% "toolkit" % "0.1.7",

libraryDependencies += toolkitTest % Test

)

Reload and create a .zip distribution

sbt:Hello> reload

...

sbt:Hello> dist

[info] Wrote /private/tmp/foo-build/target/scala-2.13/hello_2.13-0.1.0-SNAPSHOT.pom

[info] Main Scala API documentation to /tmp/foo-build/target/scala-2.13/api...

[info] Main Scala API documentation successful.

[info] Main Scala API documentation to /tmp/foo-build/core/target/scala-2.13/api...

[info] Wrote /tmp/foo-build/core/target/scala-2.13/hello-core_2.13-0.1.0-SNAPSHOT.pom

[info] Main Scala API documentation successful.

[success] All package validations passed

[info] Your package is ready in /tmp/foo-build/target/universal/hello-0.1.0-SNAPSHOT.zip

Here’s how you can run the packaged app:

$ /tmp/someother

$ cd /tmp/someother

$ unzip -o -d /tmp/someother /tmp/foo-build/target/universal/hello-0.1.0-SNAPSHOT.zip

$ ./hello-0.1.0-SNAPSHOT/bin/hello

Hello! The current temperature in New York is 22.7 C.

Dockerize your app

Note that a Docker daemon will need to be running in order for this to work.

sbt:Hello> Docker/publishLocal

....

[info] Built image hello with tags [0.1.0-SNAPSHOT]

Here’s how to run the Dockerized app:

$ docker run hello:0.1.0-SNAPSHOT

Hello! The current temperature in New York is 22.7 C.

Set the version

Change build.sbt as follows:

ThisBuild / version := "0.1.0"

ThisBuild / scalaVersion := "2.13.12"

ThisBuild / organization := "com.example"

val toolkitTest = "org.scala-lang" %% "toolkit-test" % "0.1.7"

lazy val hello = project

.in(file("."))

.aggregate(helloCore)

.dependsOn(helloCore)

.enablePlugins(JavaAppPackaging)

.settings(

name := "Hello",

libraryDependencies += toolkitTest % Test,

maintainer := "A Scala Dev!"

)

lazy val helloCore = project

.in(file("core"))

.settings(

name := "Hello Core",

libraryDependencies += "org.scala-lang" %% "toolkit" % "0.1.7",

libraryDependencies += toolkitTest % Test

)

Switch scalaVersion temporarily

sbt:Hello> ++3.3.1!

[info] Forcing Scala version to 3.3.1 on all projects.

[info] Reapplying settings...

[info] Set current project to Hello (in build file:/tmp/foo-build/)

Check the scalaVersion setting:

sbt:Hello> scalaVersion

[info] helloCore / scalaVersion

[info] 3.3.1

[info] scalaVersion

[info] 3.3.1

This setting will go away after reload.

Inspect the dist task

To find out more about dist, try help and inspect.

sbt:Hello> help dist

Creates the distribution packages.

sbt:Hello> inspect dist

To call inspect recursively on the dependency tasks use inspect tree.

sbt:Hello> inspect tree dist

[info] dist = Task[java.io.File]

[info] +-Universal / dist = Task[java.io.File]

....

Batch mode

You can also run sbt in batch mode, passing sbt commands directly from the terminal.

$ sbt clean "testOnly HelloSuite"

Note: Running in batch mode requires JVM spinup and JIT each time,

so your build will run much slower.

For day-to-day coding, we recommend using the sbt shell

or a continuous test like ~testQuick.

sbt new command

You can use the sbt new command to quickly setup a simple “Hello world” build.

$ sbt new scala/scala-seed.g8

....

A minimal Scala project.

name [My Something Project]: hello

Template applied in ./hello

When prompted for the project name, type hello.

This will create a new project under a directory named hello.

Credits

This page is based on the Essential sbt tutorial written by William “Scala William” Narmontas.

Directory structure

This page assumes you’ve installed sbt and seen sbt by example.

Base directory

In sbt’s terminology, the “base directory” is the directory containing

the project. So if you created a project hello containing

/tmp/foo-build/build.sbt as in the sbt by example,

/tmp/foo-build is your base directory.

Source code

sbt uses the same directory structure as Maven for source files by default (all paths are relative to the base directory):

src/

main/

resources/

<files to include in main jar here>

scala/

<main Scala sources>

scala-2.12/

<main Scala 2.12 specific sources>

java/

<main Java sources>

test/

resources

<files to include in test jar here>

scala/

<test Scala sources>

scala-2.12/

<test Scala 2.12 specific sources>

java/

<test Java sources>

Other directories in src/ will be ignored. Additionally, all hidden

directories will be ignored.

Source code can be placed in the project’s base directory as

hello/app.scala, which may be OK for small projects,

though for normal projects people tend to keep the projects in

the src/main/ directory to keep things neat.

The fact that you can place *.scala source code in the base directory might seem like

an odd trick, but this fact becomes relevant later.

sbt build definition files

The build definition is described in build.sbt (actually any files named *.sbt) in the project’s base directory.

build.sbt

Build support files

In addition to build.sbt, project directory can contain .scala files

that define helper objects and one-off plugins.

See organizing the build for more.

build.sbt

project/

Dependencies.scala

You may see .sbt files inside project/ but they are not equivalent to

.sbt files in the project’s base directory. Explaining this will

come later, since you’ll need some background information first.

Build products

Generated files (compiled classes, packaged jars, managed files, caches,

and documentation) will be written to the target directory by default.

Configuring version control

Your .gitignore (or equivalent for other version control systems) should

contain:

target/

Note that this deliberately has a trailing / (to match only directories)

and it deliberately has no leading / (to match project/target/ in

addition to plain target/).

Running

This page describes how to use sbt once you have set up your project. It assumes you’ve installed sbt and went through sbt by example.

sbt shell

Run sbt in your project directory with no arguments:

$ sbt

Running sbt with no command line arguments starts sbt shell. sbt shell has a command prompt (with tab completion and history!).

For example, you could type compile at the sbt shell:

> compile

To compile again, press up arrow and then enter.

To run your program, type run.

To leave sbt shell, type exit or use Ctrl+D (Unix) or Ctrl+Z

(Windows).

Batch mode

You can also run sbt in batch mode, specifying a space-separated list of sbt commands as arguments. For sbt commands that take arguments, pass the command and arguments as one argument to sbt by enclosing them in quotes. For example,

$ sbt clean compile "testOnly TestA TestB"

In this example, testOnly has arguments, TestA and TestB. The commands

will be run in sequence (clean, compile, then testOnly).

Note: Running in batch mode requires JVM spinup and JIT each time, so your build will run much slower. For day-to-day coding, we recommend using the sbt shell or Continuous build and test feature described below.

Beginning in sbt 0.13.16, using batch mode in sbt will issue an informational startup message,

$ sbt clean compile

[info] Executing in batch mode. For better performance use sbt's shell

...

It will only be triggered for sbt compile, and it can also be

suppressed with suppressSbtShellNotification := true.

Continuous build and test

To speed up your edit-compile-test cycle, you can ask sbt to automatically recompile or run tests whenever you save a source file.

Make a command run when one or more source files change by prefixing the

command with ~. For example, in sbt shell try:

> ~testQuick

Press enter to stop watching for changes.

You can use the ~ prefix with either sbt shell or batch mode.

See Triggered Execution for more details.

Common commands

Here are some of the most common sbt commands. For a more complete list, see Command Line Reference.

| Command | Description |

|---|---|

| clean | Deletes all generated files (in the target directory). |

| compile | Compiles the main sources (in src/main/scala and src/main/java directories). |

| test | Compiles and runs all tests. |

| console | Starts the Scala interpreter with a classpath including the compiled sources and all dependencies. To return to sbt, type :quit, Ctrl+D (Unix), or Ctrl+Z (Windows). |

| Runs the main class for the project in the same virtual machine as sbt. | |

| package | Creates a jar file containing the files in src/main/resources and the classes compiled from src/main/scala and src/main/java. |

| help <command> | Displays detailed help for the specified command. If no command is provided, displays brief descriptions of all commands. |

| reload | Reloads the build definition (build.sbt, project/*.scala, project/*.sbt files). Needed if you change the build definition. |

Tab completion

sbt shell has tab completion, including at an empty prompt. A special sbt convention is that pressing tab once may show only a subset of most likely completions, while pressing it more times shows more verbose choices.

sbt shell history

sbt shell remembers history even if you exit sbt and restart it. The easiest way to access history is to press the up arrow key to cycle through previously entered commands.

Note: Ctrl-R incrementally searches the history backwards.

Through JLine’s integration with the terminal environment,

you can customize sbt shell by changing $HOME/.inputrc file.

For example, the following settings in $HOME/.inputrc will allow up- and down-arrow to perform

prefix-based search of the history.

"\e[A": history-search-backward

"\e[B": history-search-forward

"\e[C": forward-char

"\e[D": backward-char

sbt shell also supports the following commands:

| Command | Description |

|---|---|

| ! | Show history command help. |

| !! | Execute the previous command again. |

| !: | Show all previous commands. |

| !:n | Show the last n commands. |

| !n | Execute the command with index n, as shown by the !: command. |

| !-n | Execute the nth command before this one. |

| !string | Execute the most recent command starting with 'string.' |

| !?string | Execute the most recent command containing 'string.' |

IDE Integration

While it’s possible to code Scala with just an editor and sbt, most programmers today use an Integrated Development Environment, or IDE for short. Two of the popular IDEs in Scala are Metals and IntelliJ IDEA, and they both integrate with sbt builds.

- Using sbt as Metals build server

- Importing to IntelliJ IDEA

- Using sbt as IntelliJ IDEA build server

- Using Neovim as Metals frontend

Using sbt as Metals build server

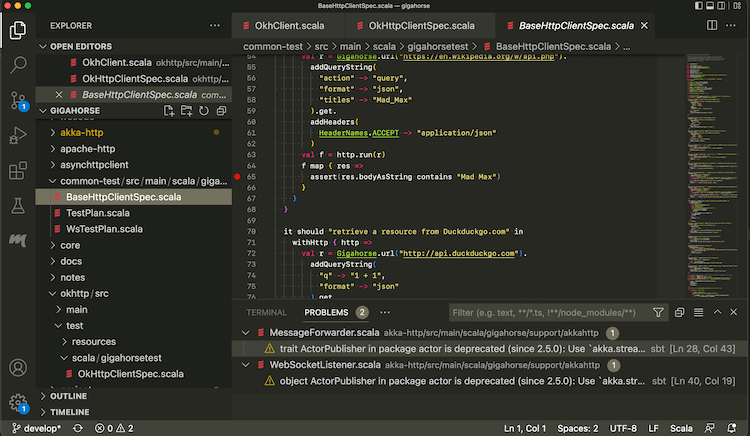

Metals is an open source language server for Scala, which can act as the backend for VS Code and other editors that support LSP. Metals in turn supports different build servers including sbt via the Build Server Protocol (BSP).

To use Metals on VS Code:

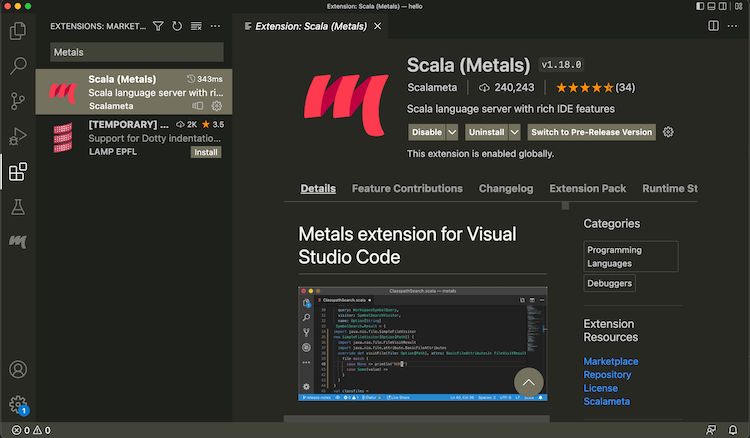

- Install Metals from Extensions tab:

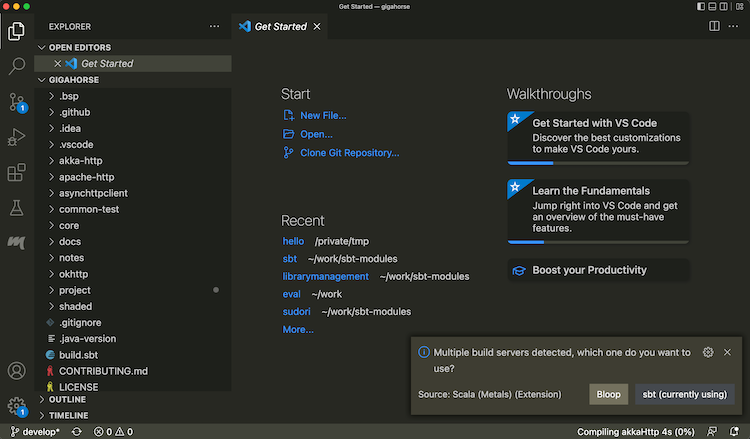

- Open a directory containing a

build.sbtfile. - From the menubar, run View > Command Palette… (

Cmd-Shift-Pon macOS) “Metals: Switch build server”, and select “sbt”

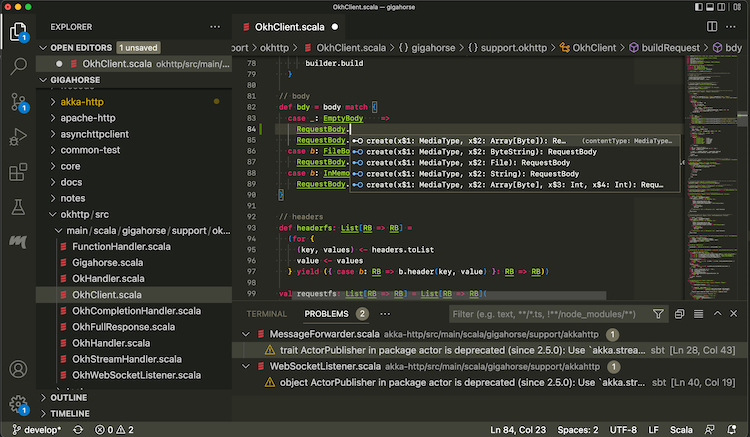

- Once the import process is complete, open a Scala file to see that code completion works:

Use the following setting to opt-out some of the subprojects from BSP.

bspEnabled := false

When you make changes to the code and save them (Cmd-S on macOS), Metals will invoke sbt to do

the actual building work.

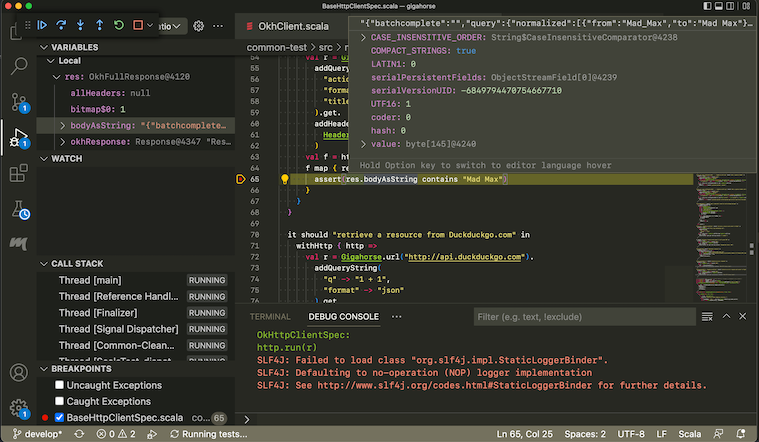

Interactive debugging on VS Code

- Metals supports interactive debugging by setting break points in the code:

- Interactive debugging can be started by right-clicking on an unit test, and selecting “Debug Test.”

When the test hits a break point, you can inspect the values of the variables:

See Debugging page on VS Code documentation for more details on how to navigate an interactive debugging session.

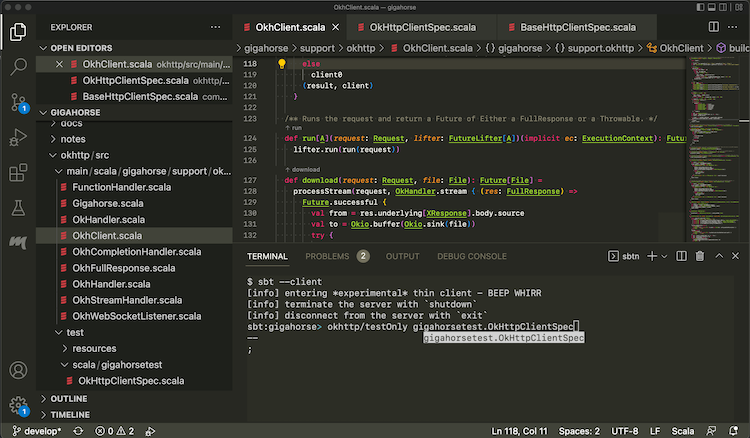

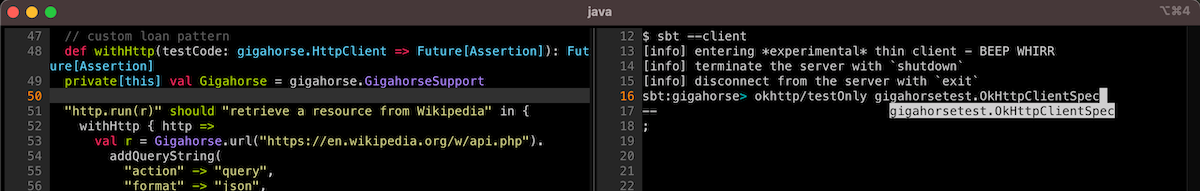

Logging into sbt session

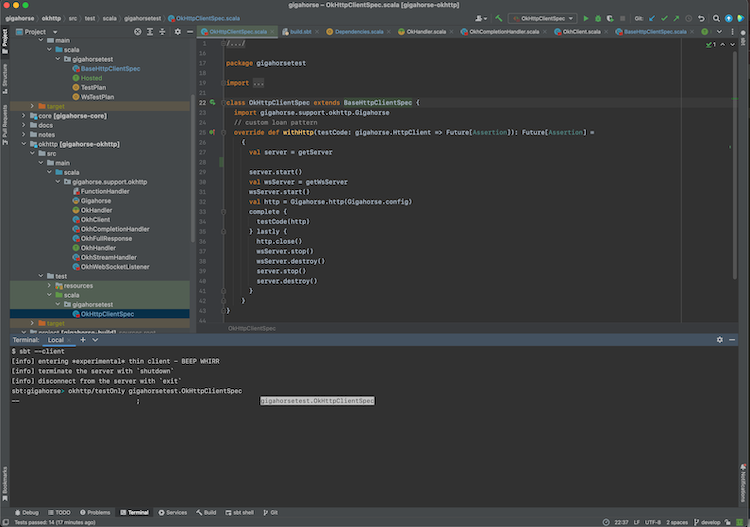

While Metals uses sbt as the build server, we can also log into the same sbt session using a thin client.

- From Terminal section, type in

sbt --client

This lets you log into the sbt session Metals has started. In there you can call testOnly and other tasks with

the code already compiled.

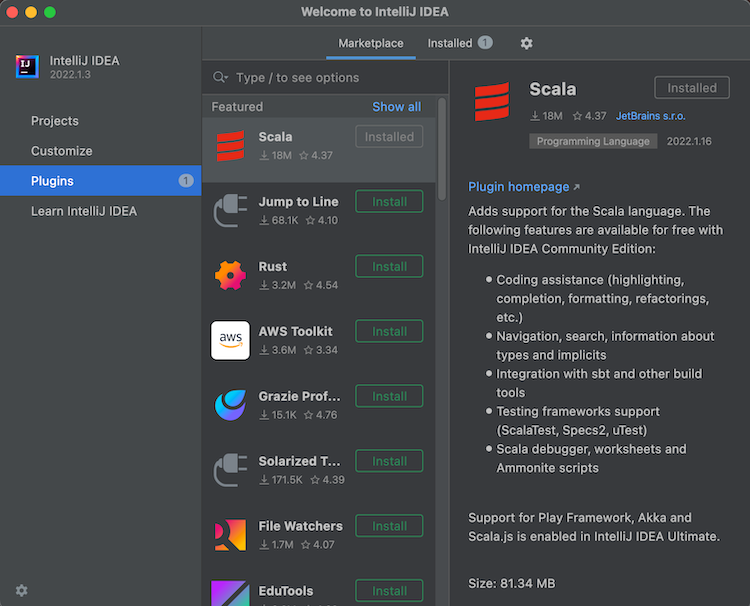

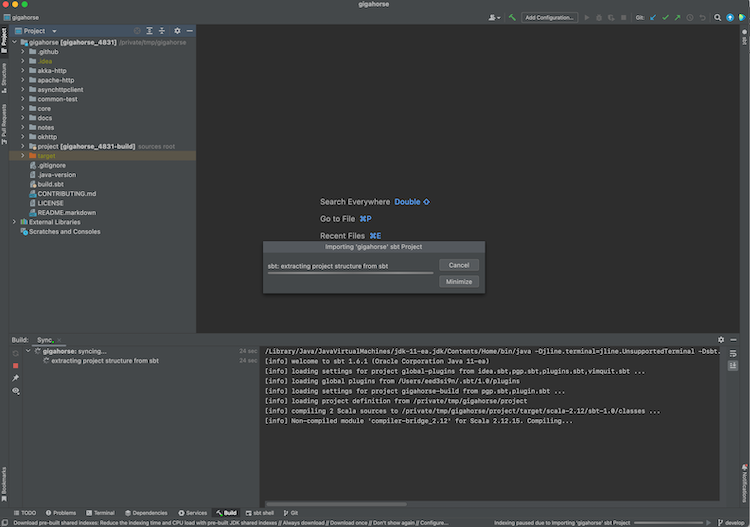

Importing to IntelliJ IDEA

IntelliJ IDEA is an IDE created by JetBrains, and the Community Edition is open source under Apache v2 license. IntelliJ integrates with many build tools, including sbt, to import the project. This is a more traditional approach that might be more reliable than using BSP approach.

To import a build to IntelliJ IDEA:

- Install Scala plugin on the Plugins tab:

- From Projects, open a directory containing a

build.sbtfile.

- Once the import process is complete, open a Scala file to see that code completion works.

IntelliJ Scala plugin uses its own lightweight compilation engine to detect errors, which is fast but sometimes incorrect. Per compiler-based highlighting, IntelliJ can be configured to use the Scala compiler for error highlighting.

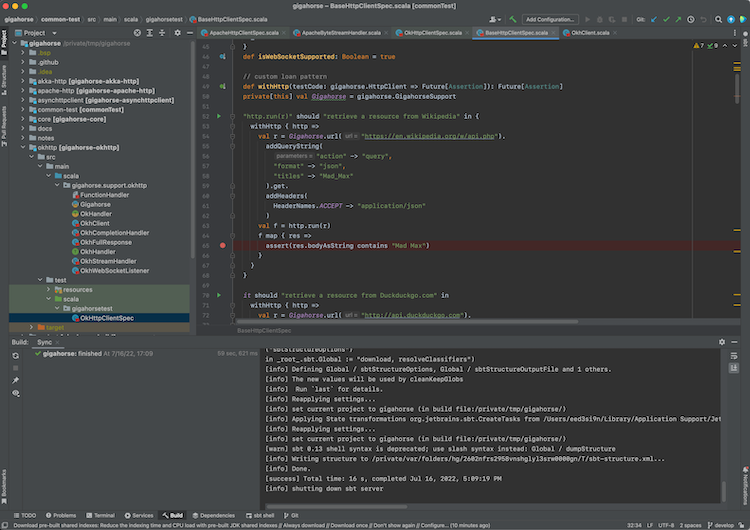

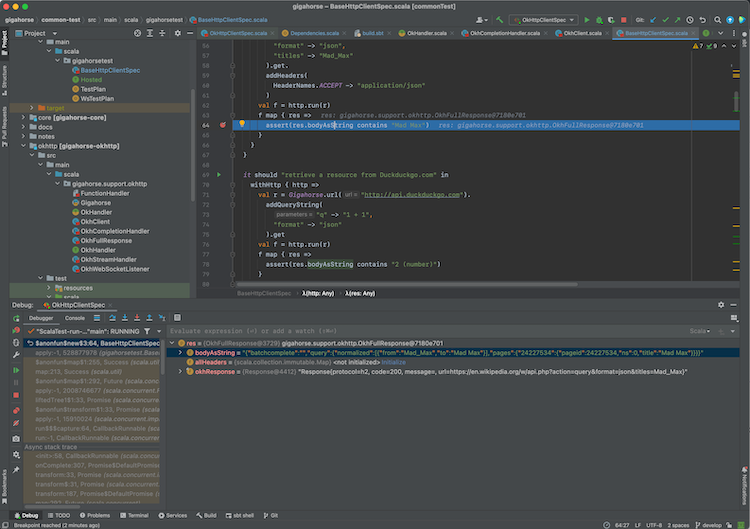

Interactive debugging with IntelliJ IDEA

- IntelliJ supports interactive debugging by setting break points in the code:

- Interactive debugging can be started by right-clicking on an unit test, and selecting “Debug ‘<test name>‘.”

Alternatively, you can click the green “run” icon on the left part of the editor near the unit test.

When the test hits a break point, you can inspect the values of the variables:

See Debug Code page on IntelliJ documentation for more details on how to navigate an interactive debugging session.

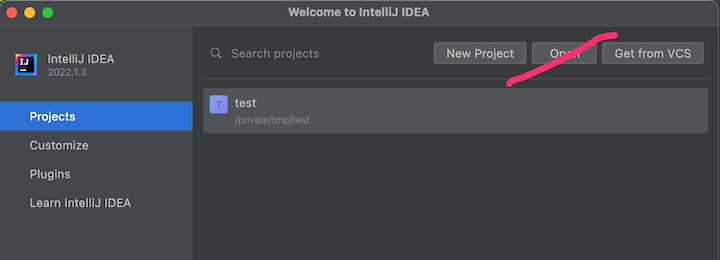

Using sbt as IntelliJ IDEA build server (advanced)

Importing the build to IntelliJ means that you’re effectively using IntelliJ as the build tool and the compiler while you code (see also compiler-based highlighting). While many users are happy with the experience, depending on the code base some of the compilation errors may be false, it may not work well with plugins that generate sources, and generally you might want to code with the identical build semantics as sbt. Thankfully, modern IntelliJ supports alternative build servers including sbt via the Build Server Protocol (BSP).

The benefit of using BSP with IntelliJ is that you’re using sbt to do the actual build work, so if you are the kind of programmer who had sbt session up on the side, this avoids double compilation.

| Import to IntelliJ | BSP with IntelliJ | |

|---|---|---|

| Reliability | ✅ Reliable behavior | ⚠️ Less mature. Might encounter UX issues. |

| Responsiveness | ✅ | ⚠️ |

| Correctness | ⚠️ Uses its own compiler for type checking, but can be configured to use scalac | ✅ Uses Zinc + Scala compiler for type checking |

| Generated source | ❌ Generated source requires resync | ✅ |

| Build reuse | ❌ Using sbt side-by-side requires double build | ✅ |

To use sbt as build server on IntelliJ:

- Install Scala plugin on the Plugins tab.

- To use the BSP approach, do not use Open button on the Project tab:

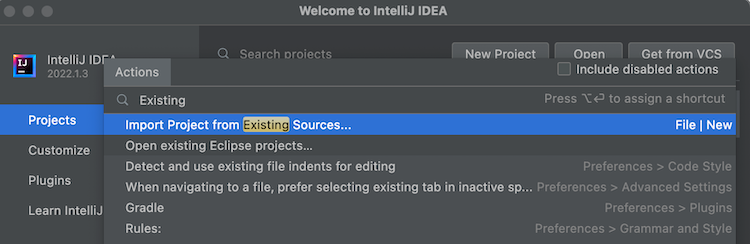

- From menubar, click New > “Project From Existing Sources”, or Find Action (

Cmd-Shift-Pon macOS) and type “Existing” to find “Import Project From Existing Sources”:

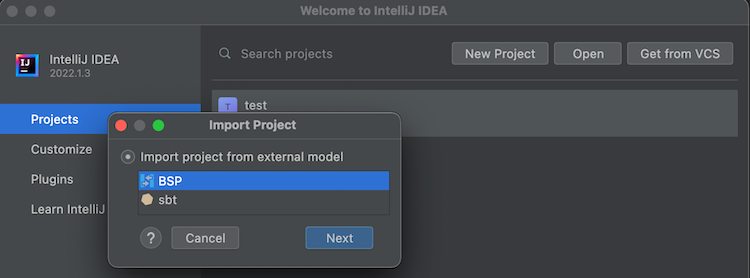

- Open a

build.sbtfile. Select BSP when prompted:

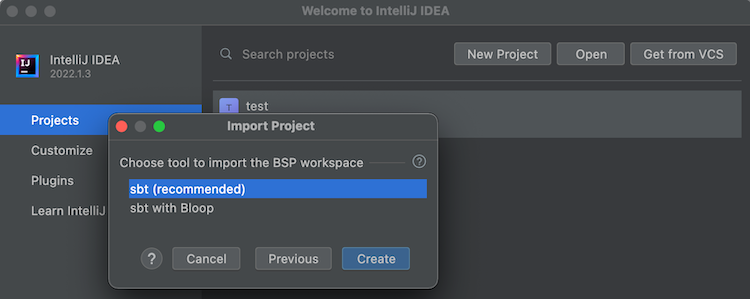

- Select sbt (recommended) as the tool to import the BSP workspace:

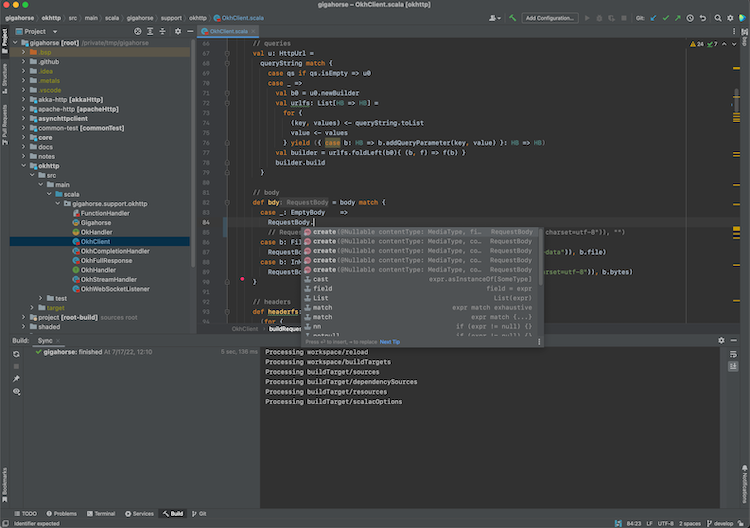

- Once the import process is complete, open a Scala file to see that code completion works:

Use the following setting to opt-out some of the subprojects from BSP.

bspEnabled := false

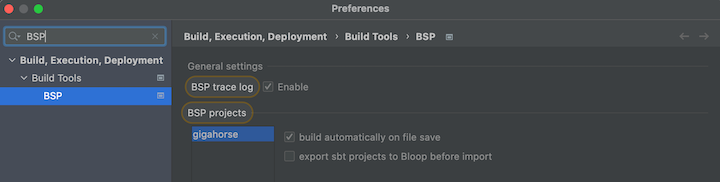

- Open Preferences, search BSP and check “build automatically on file save”, and uncheck “export sbt projects to Bloop before import”:

When you make changes to the code and save them (Cmd-S on macOS), IntelliJ will invoke sbt to do

the actual building work.

See also Igal Tabachnik’s Using BSP effectively in IntelliJ and Scala for more details.

Logging into sbt session

We can also log into the existing sbt session using the thin client.

- From Terminal section, type in

sbt --client

This lets you log into the sbt session IntelliJ has started. In there you can call testOnly and other tasks with

the code already compiled.

Using Neovim as Metals frontend (advanced)

Neovim is a modern fork of Vim that supports LSP out-of-box, which means it can be configured as a frontend for Metals.

Chris Kipp, who is a maintainer of Metals, created nvim-metals plugin that provides comprehensive Metals support on Neovim. To install nvim-metals, create lsp.lua under $XDG_CONFIG_HOME/nvim/lua/ based on Chris’s lsp.lua and adjust to your preference. For example, comment out its plugins section and load the listed plugins using the plugin manager of your choice such as vim-plug.

In init.vim, the file can be loaded as:

lua << END

require('lsp')

END

Per lsp.lua, g:metals_status should be displayed on the status line, which can be done using lualine.nvim etc.

- Next, open a Scala file in an sbt build using Neovim.

- Run

:MetalsInstallwhen prompted. - Run

:MetalsStartServer. - If the status line is set up, you should see something like “Connecting to sbt” or “Indexing.”

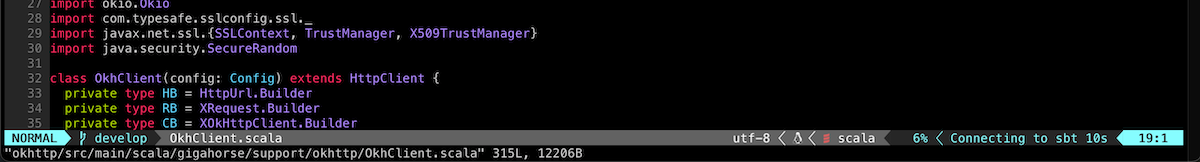

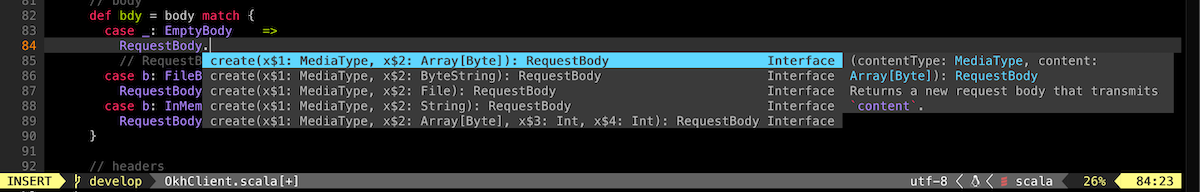

- Code completion works when you’re in Insert mode, and you can tab through the candidates:

- A build is triggered upon saving changes, and compilation errors are displayed inline:

Go to definition

- You can jump to definition of the symbol under cursor by using

gD(exact keybinding can be customized):

- Use

Ctrl-Oto return to the old buffer.

Hover

- To display the type information of the symbol under cursor, like hovering, use

Kin Normal mode:

Listing diagnostics

- To list all compilation errors and warnings, use

<leader>aa:

- Since this is in the standard quickfix list, you can use the command such as

:cnextand:cprevto nagivate through the errors and warnings. - To list just the errors, use

<leader>ae.

Interactive debugging with Neovim

- Thanks to nvim-dap, Neovim supports interactive debugging. Set break points in the code using

<leader>dt:

- Nagivate to a unit test, confirm that it’s built by hovering (

K), and then “debug continue” (<leader>dc) to start a debugger. Choose “1: RunOrTest” when prompted. - When the test hits a break point, you can inspect the values of the variables by debug hovering (

<leader>dK):

- “debug continue” (

<leader>dc) again to end the session.

See nvim-metals regarding further details.

Logging into sbt session

We can also log into the existing sbt session using the thin client.

- In a new vim window type

:terminalto start the built-in terminal. - Type in

sbt --client

Even though it’s inside Neovim, tab completion etc works fine inside.

Build definition

This page describes sbt build definitions, including some “theory” and

the syntax of build.sbt.

It assumes you have installed a recent version of sbt, such as sbt 1.10.10,

know how to use sbt,

and have read the previous pages in the Getting Started Guide.

This page discusses the build.sbt build definition.

Specifying the sbt version

As part of your build definition you will specify the version of

sbt that your build uses.

This allows people with different versions of the sbt launcher to

build the same projects with consistent results.

To do this, create a file named project/build.properties that specifies the sbt version as follows:

sbt.version=1.10.10

If the required version is not available locally,

the sbt launcher will download it for you.

If this file is not present, the sbt launcher will choose an arbitrary version,

which is discouraged because it makes your build non-portable.

What is a build definition?

A build definition is defined in build.sbt,

and it consists of a set of projects (of type Project).

Because the term project can be ambiguous,

we often call it a subproject in this guide.

For instance, in build.sbt you define

the subproject located in the current directory like this:

lazy val root = (project in file("."))

.settings(

name := "Hello",

scalaVersion := "2.12.7"

)

Each subproject is configured by key-value pairs.

For example, one key is name and it maps to a string value, the name of

your subproject.

The key-value pairs are listed under the .settings(...) method as follows:

lazy val root = (project in file("."))

.settings(

name := "Hello",

scalaVersion := "2.12.7"

)

How build.sbt defines settings

build.sbt defines subprojects, which holds a sequence of key-value pairs

called setting expressions using build.sbt domain-specific language (DSL).

ThisBuild / organization := "com.example"

ThisBuild / scalaVersion := "2.12.18"

ThisBuild / version := "0.1.0-SNAPSHOT"

lazy val root = (project in file("."))

.settings(

name := "hello"

)

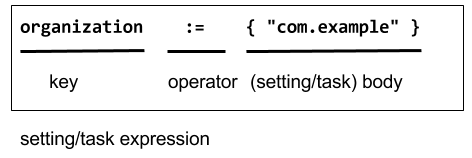

Let’s take a closer look at the build.sbt DSL:

Each entry is called a setting expression.

Some among them are also called task expressions.

We will see more on the difference later in this page.

A setting expression consists of three parts:

- Left-hand side is a key.

- Operator, which in this case is

:= - Right-hand side is called the body, or the setting body.

On the left-hand side, name, version, and scalaVersion are keys.

A key is an instance of

SettingKey[T],

TaskKey[T], or

InputKey[T] where T is the

expected value type. The kinds of key are explained below.

Because key name is typed to SettingKey[String],

the := operator on name is also typed specifically to String.

If you use the wrong value type, the build definition will not compile:

lazy val root = (project in file("."))

.settings(

name := 42 // will not compile

)

build.sbt may also be

interspersed with vals, lazy vals, and defs. Top-level objects and

classes are not allowed in build.sbt. Those should go in the project/

directory as Scala source files.

Keys

Types

There are three flavors of key:

SettingKey[T]: a key for a value evaluated only once (the value is computed when loading the subproject, and kept around).TaskKey[T]: a key for a value, called a task, that is evaluated each time it’s referred to (similarly to a scala function), potentially with side effects.InputKey[T]: a key for a task that has command line arguments as input. Check out Input Tasks for more details.

Built-in Keys

The built-in keys are just fields in an object called

Keys. A build.sbt implicitly has an

import sbt.Keys._, so sbt.Keys.name can be referred to as name.

Custom Keys

Custom keys may be defined with their respective creation methods:

settingKey, taskKey, and inputKey. Each method expects the type of the

value associated with the key as well as a description. The name of the

key is taken from the val the key is assigned to. For example, to define

a key for a new task called hello,

lazy val hello = taskKey[Unit]("An example task")

Here we have used the fact that an .sbt file can contain vals and defs

in addition to settings. All such definitions are evaluated before

settings regardless of where they are defined in the file.

Note: Typically, lazy vals are used instead of vals to avoid initialization order problems.

Task vs Setting keys

A TaskKey[T] is said to define a task. Tasks are operations such as

compile or package. They may return Unit (Unit is void for Scala), or

they may return a value related to the task, for example package is a

TaskKey[File] and its value is the jar file it creates.

Each time you start a task execution, for example by typing compile at

the interactive sbt prompt, sbt will re-run any tasks involved exactly

once.

sbt’s key-value pairs describing the subproject can keep around a fixed string value

for a setting such as name, but it has to keep around some executable

code for a task such as compile — even if that executable code

eventually returns a string, it has to be re-run every time.

A given key always refers to either a task or a plain setting. That is, “taskiness” (whether to re-run each time) is a property of the key, not the value.

Listing all available setting keys and task keys

The list of settings keys that currently exist in your build definition

can be obtained by typing settings or settings -v at the sbt prompt.

Likewise, the list of tasks keys currently defined can be obtained by typing

tasks or tasks -v. You can also have a look at

Command Line Reference for a discussion on built-in

tasks commonly used at the sbt prompt.

A key will be printed in the resulting list if:

- it’s built-in sbt (like

nameorscalaVersionin the examples above) - you created it as a custom key

- you imported a plugin that brought it into the build definition.

You can also type help <key> at the sbt prompt for more information.

Defining tasks and settings

Using :=, you can assign a value to a setting and a computation to a

task. For a setting, the value will be computed once at project load

time. For a task, the computation will be re-run each time the task is

executed.

For example, to implement the hello task from the previous section:

lazy val hello = taskKey[Unit]("An example task")

lazy val root = (project in file("."))

.settings(

hello := { println("Hello!") }

)

We already saw an example of defining settings when we defined the project’s name,

lazy val root = (project in file("."))

.settings(

name := "hello"

)

Types for tasks and settings

From a type-system perspective, the Setting created from a task key is

slightly different from the one created from a setting key.

taskKey := 42 results in a Setting[Task[T]] while settingKey := 42

results in a Setting[T]. For most purposes this makes no difference; the

task key still creates a value of type T when the task executes.

The T vs. Task[T] type difference has this implication: a setting can’t

depend on a task, because a setting is evaluated only once on project

load and is not re-run. More on this in task graph.

Keys in sbt shell

In sbt shell, you can type the name of any task to execute

that task. This is why typing compile runs the compile task. compile is

a task key.

If you type the name of a setting key rather than a task key, the value

of the setting key will be displayed. Typing a task key name executes

the task but doesn’t display the resulting value; to see a task’s

result, use show <task name> rather than plain <task name>. The

convention for keys names is to use camelCase so that the command line

name and the Scala identifiers are the same.

To learn more about any key, type inspect <keyname> at the sbt

interactive prompt. Some of the information inspect displays won’t make

sense yet, but at the top it shows you the setting’s value type and a

brief description of the setting.

Imports in build.sbt

You can place import statements at the top of build.sbt; they need not

be separated by blank lines.

There are some implied default imports, as follows:

import sbt._

import Keys._

(In addition, if you have auto plugins, the names marked under autoImport will be imported.)

Bare .sbt build definition

The settings can be written directly into the build.sbt file instead of

putting them inside a .settings(...) call. We call this the “bare style.”

ThisBuild / version := "1.0"

ThisBuild / scalaVersion := "2.12.18"

This syntax is recommended for ThisBuild scoped settings and adding plugins.

See later section about the scoping and the plugins.

Adding library dependencies

To depend on third-party libraries, there are two options. The first is

to drop jars in lib/ (unmanaged dependencies) and the other is to add

managed dependencies, which will look like this in build.sbt:

val derby = "org.apache.derby" % "derby" % "10.4.1.3"

ThisBuild / organization := "com.example"

ThisBuild / scalaVersion := "2.12.18"

ThisBuild / version := "0.1.0-SNAPSHOT"

lazy val root = (project in file("."))

.settings(

name := "Hello",

libraryDependencies += derby

)

This is how you add a managed dependency on the Apache Derby library, version 10.4.1.3.

The libraryDependencies key involves two complexities: += rather than

:=, and the % method. += appends to the key’s old value rather than

replacing it, this is explained in

Task Graph. The %

method is used to construct an Ivy module ID from strings, explained in

Library dependencies.

We’ll skip over the details of library dependencies until later in the Getting Started Guide. There’s a whole page covering it later on.

Multi-project builds

This page introduces multiple subprojects in a single build.

Please read the earlier pages in the Getting Started Guide first, in particular you need to understand build.sbt before reading this page.

Multiple subprojects

It can be useful to keep multiple related subprojects in a single build, especially if they depend on one another and you tend to modify them together.

Each subproject in a build has its own source directories, generates its own jar file when you run package, and in general works like any other project.

A project is defined by declaring a lazy val of type Project. For example, :

lazy val util = (project in file("util"))

lazy val core = (project in file("core"))

The name of the val is used as the subproject’s ID, which is used to refer to the subproject at the sbt shell.

Optionally the base directory may be omitted if it’s the same as the name of the val.

lazy val util = project

lazy val core = project

Build-wide settings

To factor out common settings across multiple subprojects,

define the settings scoped to ThisBuild.

ThisBuild acts as a special subproject name that you can use to define default

value for the build.

When you define one or more subprojects, and when the subproject does not define

scalaVersion key, it will look for ThisBuild / scalaVersion.

The limitation is that the right-hand side needs to be a pure value

or settings scoped to Global or ThisBuild,

and there are no default settings scoped to subprojects. (See Scopes)

ThisBuild / organization := "com.example"

ThisBuild / version := "0.1.0-SNAPSHOT"

ThisBuild / scalaVersion := "2.12.18"

lazy val core = (project in file("core"))

.settings(

// other settings

)

lazy val util = (project in file("util"))

.settings(

// other settings

)

Now we can bump up version in one place, and it will be reflected

across subprojects when you reload the build.

Common settings

Another way to factor out common settings across multiple projects is to

create a sequence named commonSettings and call settings method

on each project.

lazy val commonSettings = Seq(

target := { baseDirectory.value / "target2" }

)

lazy val core = (project in file("core"))

.settings(

commonSettings,

// other settings

)

lazy val util = (project in file("util"))

.settings(

commonSettings,

// other settings

)

Dependencies

Projects in the build can be completely independent of one another, but usually they will be related to one another by some kind of dependency. There are two types of dependencies: aggregate and classpath.

Aggregation

Aggregation means that running a task on the aggregate project will also run it on the aggregated projects. For example,

lazy val root = (project in file("."))

.aggregate(util, core)

lazy val util = (project in file("util"))

lazy val core = (project in file("core"))

In the above example, the root project aggregates util and core. Start

up sbt with two subprojects as in the example, and try compile. You

should see that all three projects are compiled.

In the project doing the aggregating, the root project in this case,

you can control aggregation per-task. For example, to avoid aggregating

the update task:

lazy val root = (project in file("."))

.aggregate(util, core)

.settings(

update / aggregate := false

)

[...]

update / aggregate is the aggregate key scoped to the update task. (See

scopes.)

Note: aggregation will run the aggregated tasks in parallel and with no defined ordering between them.

Classpath dependencies

A project may depend on code in another project. This is done by adding

a dependsOn method call. For example, if core needed util on its

classpath, you would define core as:

lazy val core = project.dependsOn(util)

Now code in core can use classes from util. This also creates an

ordering between the projects when compiling them; util must be updated

and compiled before core can be compiled.

To depend on multiple projects, use multiple arguments to dependsOn,

like dependsOn(bar, baz).

Per-configuration classpath dependencies

core dependsOn(util) means that the compile configuration in core depends

on the compile configuration in util. You could write this explicitly as

dependsOn(util % "compile->compile").

The -> in "compile->compile" means “depends on” so "test->compile"

means the test configuration in core would depend on the compile

configuration in util.

Omitting the ->config part implies ->compile, so

dependsOn(util % "test") means that the test configuration in core depends

on the Compile configuration in util.

A useful declaration is "test->test" which means test depends on test.

This allows you to put utility code for testing in util/src/test/scala

and then use that code in core/src/test/scala, for example.

You can have multiple configurations for a dependency, separated by

semicolons. For example,

dependsOn(util % "test->test;compile->compile").

Inter-project dependencies

On extremely large projects with many files and many subprojects, sbt can perform less optimally at continuously watching files that have changed and use a lot of disk and system I/O.

sbt has trackInternalDependencies and exportToInternal

settings. These can be used to control whether a dependent subproject should

trigger compilation of its dependencies when you call compile. Both keys will

take one of three values: TrackLevel.NoTracking,

TrackLevel.TrackIfMissing, and TrackLevel.TrackAlways. By default

they are both set to TrackLevel.TrackAlways.

When trackInternalDependencies is set to

TrackLevel.TrackIfMissing, sbt will no longer try to compile

internal (inter-project) dependencies automatically, unless there are

no *.class files (or JAR file when exportJars is true) in the

output directory.

When the setting is set to TrackLevel.NoTracking, the compilation of

internal dependencies will be skipped. Note that the classpath will

still be appended, and dependency graph will still show them as

dependencies. The motivation is to save the I/O overhead of checking

for the changes on a build with many subprojects during

development. Here’s how to set all subprojects to TrackIfMissing.

ThisBuild / trackInternalDependencies := TrackLevel.TrackIfMissing

ThisBuild / exportJars := true

lazy val root = (project in file("."))

.aggregate(....)

The exportToInternal setting allows the dependee subprojects to opt

out of the internal tracking, which might be useful if you want to

track most subprojects except for a few. The intersection of the

trackInternalDependencies and exportToInternal settings will be

used to determine the actual track level. Here’s an example to opt-out

one project:

lazy val dontTrackMe = (project in file("dontTrackMe"))

.settings(

exportToInternal := TrackLevel.NoTracking

)

Default root project

If a project is not defined for the root directory in the build, sbt creates a default one that aggregates all other projects in the build.

Because project hello-foo is defined with base = file("foo"), it will be

contained in the subdirectory foo. Its sources could be directly under

foo, like foo/Foo.scala, or in foo/src/main/scala. The usual sbt

directory structure applies underneath foo with the

exception of build definition files.

Navigating projects interactively

At the sbt interactive prompt, type projects to list your projects and

project <projectname> to select a current project. When you run a task

like compile, it runs on the current project. So you don’t necessarily

have to compile the root project, you could compile only a subproject.

You can run a task in another project by explicitly specifying the

project ID, such as subProjectID/compile.

Common code

The definitions in .sbt files are not visible in other .sbt files. In

order to share code between .sbt files, define one or more Scala files

in the project/ directory of the build root.

See organizing the build for details.

Appendix: Subproject build definition files

Any .sbt files in foo, say foo/build.sbt, will be merged with the build

definition for the entire build, but scoped to the hello-foo project.

If your whole project is in hello, try defining a different version

(version := "0.6") in hello/build.sbt, hello/foo/build.sbt, and

hello/bar/build.sbt. Now show version at the sbt interactive prompt. You

should get something like this (with whatever versions you defined):

> show version

[info] hello-foo/*:version

[info] 0.7

[info] hello-bar/*:version

[info] 0.9

[info] hello/*:version

[info] 0.5

hello-foo/*:version was defined in hello/foo/build.sbt,

hello-bar/*:version was defined in hello/bar/build.sbt, and

hello/*:version was defined in hello/build.sbt. Remember the

syntax for scoped keys. Each version key is scoped to a

project, based on the location of the build.sbt. But all three build.sbt

are part of the same build definition.

Style choices:

- Each subproject’s settings can go into

*.sbtfiles in the base directory of that project, while the rootbuild.sbtdeclares only minimum project declarations in the form oflazy val foo = (project in file("foo"))without the settings. - We recommend putting all project declarations and settings in the root

build.sbtfile in order to keep all build definition under a single file. However, it’s up to you.

Note: You cannot have a project subdirectory or project/*.scala files in the

sub-projects. foo/project/Build.scala would be ignored.

Task graph

Continuing from build definition,

this page explains build.sbt definition in more detail.

Rather than thinking of settings as key-value pairs,

a better analogy would be to think of it as a directed acyclic graph (DAG)

of tasks where the edges denote happens-before. Let’s call this the task graph.

Terminology

Let’s review the key terms before we dive in.

- Setting/Task expression: entry inside

.settings(...). - Key: Left hand side of a setting expression. It could be a

SettingKey[A], aTaskKey[A], or anInputKey[A]. - Setting: Defined by a setting expression with

SettingKey[A]. The value is calculated once during load. - Task: Defined by a task expression with

TaskKey[A]. The value is calculated each time it is invoked.

Declaring dependency to other tasks

In build.sbt DSL, we use .value method to express the dependency to

another task or setting. The value method is special and may only be

called in the argument to := (or, += or ++=, which we’ll see later).

As a first example, consider defining the scalacOptions that depends on

update and clean tasks. Here are the definitions of these keys (from Keys).

Note: The values calculated below are nonsensical for scalaOptions,

and it’s just for demonstration purpose only:

val scalacOptions = taskKey[Seq[String]]("Options for the Scala compiler.")

val update = taskKey[UpdateReport]("Resolves and optionally retrieves dependencies, producing a report.")

val clean = taskKey[Unit]("Deletes files produced by the build, such as generated sources, compiled classes, and task caches.")

Here’s how we can rewire scalacOptions:

scalacOptions := {

val ur = update.value // update task happens-before scalacOptions

val x = clean.value // clean task happens-before scalacOptions

// ---- scalacOptions begins here ----

ur.allConfigurations.take(3)

}

update.value and clean.value declare task dependencies,

whereas ur.allConfigurations.take(3) is the body of the task.

.value is not a normal Scala method call. build.sbt DSL

uses a macro to lift these outside of the task body.

Both update and clean tasks are completed

by the time task engine evaluates the opening { of scalacOptions

regardless of which line it appears in the body.

See the following example:

ThisBuild / organization := "com.example"

ThisBuild / scalaVersion := "2.12.18"

ThisBuild / version := "0.1.0-SNAPSHOT"

lazy val root = (project in file("."))

.settings(

name := "Hello",

scalacOptions := {

val out = streams.value // streams task happens-before scalacOptions

val log = out.log

log.info("123")

val ur = update.value // update task happens-before scalacOptions

log.info("456")

ur.allConfigurations.take(3)

}

)

Next, from sbt shell type scalacOptions:

> scalacOptions

[info] Updating {file:/xxx/}root...

[info] Resolving jline#jline;2.14.1 ...

[info] Done updating.

[info] 123

[info] 456

[success] Total time: 0 s, completed Jan 2, 2017 10:38:24 PM

Even though val ur = ... appears in between log.info("123") and

log.info("456") the evaluation of update task happens before

either of them.

Here’s another example:

ThisBuild / organization := "com.example"

ThisBuild / scalaVersion := "2.12.18"

ThisBuild / version := "0.1.0-SNAPSHOT"

lazy val root = (project in file("."))

.settings(

name := "Hello",

scalacOptions := {

val ur = update.value // update task happens-before scalacOptions

if (false) {

val x = clean.value // clean task happens-before scalacOptions

}

ur.allConfigurations.take(3)

}

)

Next, from sbt shell type run then scalacOptions:

> run

[info] Updating {file:/xxx/}root...

[info] Resolving jline#jline;2.14.1 ...

[info] Done updating.

[info] Compiling 1 Scala source to /Users/eugene/work/quick-test/task-graph/target/scala-2.12/classes...

[info] Running example.Hello

hello

[success] Total time: 0 s, completed Jan 2, 2017 10:45:19 PM

> scalacOptions

[info] Updating {file:/xxx/}root...

[info] Resolving jline#jline;2.14.1 ...

[info] Done updating.

[success] Total time: 0 s, completed Jan 2, 2017 10:45:23 PM

Now if you check for target/scala-2.12/classes/,

it won’t exist because clean task has run even though it is inside

the if (false).

Another important thing to note is that there’s no guarantee

about the ordering of update and clean tasks.

They might run update then clean, clean then update,

or both in parallel.

Inlining .value calls

As explained above, .value is a special method that is used to express

the dependency to other tasks and settings.

Until you’re familiar with build.sbt, we recommend you

put all .value calls at the top of the task body.

However, as you get more comfortable, you might wish to inline the .value calls

because it could make the task/setting more concise, and you don’t have to

come up with variable names.

We’ve inlined a few examples:

scalacOptions := {

val x = clean.value

update.value.allConfigurations.take(3)

}

Note whether .value calls are inlined, or placed anywhere in the task body,

they are still evaluated before entering the task body.

Inspecting the task

In the above example, scalacOptions has a dependency on

update and clean tasks.

If you place the above in build.sbt and

run the sbt interactive console, then type inspect scalacOptions, you should see

(in part):

> inspect scalacOptions

[info] Task: scala.collection.Seq[java.lang.String]

[info] Description:

[info] Options for the Scala compiler.

....

[info] Dependencies:

[info] *:clean

[info] *:update

....

This is how sbt knows which tasks depend on which other tasks.

For example, if you inspect tree compile you’ll see it depends on another key

incCompileSetup, which it in turn depends on

other keys like dependencyClasspath. Keep following the dependency chains and magic happens.

> inspect tree compile

[info] compile:compile = Task[sbt.inc.Analysis]

[info] +-compile:incCompileSetup = Task[sbt.Compiler$IncSetup]

[info] | +-*/*:skip = Task[Boolean]

[info] | +-compile:compileAnalysisFilename = Task[java.lang.String]

[info] | | +-*/*:crossPaths = true

[info] | | +-{.}/*:scalaBinaryVersion = 2.12

[info] | |

[info] | +-*/*:compilerCache = Task[xsbti.compile.GlobalsCache]

[info] | +-*/*:definesClass = Task[scala.Function1[java.io.File, scala.Function1[java.lang.String, Boolean]]]

[info] | +-compile:dependencyClasspath = Task[scala.collection.Seq[sbt.Attributed[java.io.File]]]

[info] | | +-compile:dependencyClasspath::streams = Task[sbt.std.TaskStreams[sbt.Init$ScopedKey[_ <: Any]]]

[info] | | | +-*/*:streamsManager = Task[sbt.std.Streams[sbt.Init$ScopedKey[_ <: Any]]]

[info] | | |

[info] | | +-compile:externalDependencyClasspath = Task[scala.collection.Seq[sbt.Attributed[java.io.File]]]

[info] | | | +-compile:externalDependencyClasspath::streams = Task[sbt.std.TaskStreams[sbt.Init$ScopedKey[_ <: Any]]]

[info] | | | | +-*/*:streamsManager = Task[sbt.std.Streams[sbt.Init$ScopedKey[_ <: Any]]]

[info] | | | |

[info] | | | +-compile:managedClasspath = Task[scala.collection.Seq[sbt.Attributed[java.io.File]]]

[info] | | | | +-compile:classpathConfiguration = Task[sbt.Configuration]

[info] | | | | | +-compile:configuration = compile

[info] | | | | | +-*/*:internalConfigurationMap = <function1>

[info] | | | | | +-*:update = Task[sbt.UpdateReport]

[info] | | | | |

....

When you type compile sbt automatically performs an update, for example. It

Just Works because the values required as inputs to the compile

computation require sbt to do the update computation first.

In this way, all build dependencies in sbt are automatic rather than explicitly declared. If you use a key’s value in another computation, then the computation depends on that key.

Defining a task that depends on other settings

scalacOptions is a task key.

Let’s say it’s been set to some values already, but you want to

filter out "-Xfatal-warnings" and "-deprecation" for non-2.12.

lazy val root = (project in file("."))

.settings(

name := "Hello",

organization := "com.example",

scalaVersion := "2.12.18",

version := "0.1.0-SNAPSHOT",

scalacOptions := List("-encoding", "utf8", "-Xfatal-warnings", "-deprecation", "-unchecked"),

scalacOptions := {

val old = scalacOptions.value

scalaBinaryVersion.value match {

case "2.12" => old

case _ => old filterNot (Set("-Xfatal-warnings", "-deprecation").apply)

}

}

)

Here’s how it should look on the sbt shell:

> show scalacOptions

[info] * -encoding

[info] * utf8

[info] * -Xfatal-warnings

[info] * -deprecation

[info] * -unchecked

[success] Total time: 0 s, completed Jan 2, 2017 11:44:44 PM

> ++2.11.8!

[info] Forcing Scala version to 2.11.8 on all projects.

[info] Reapplying settings...

[info] Set current project to Hello (in build file:/xxx/)

> show scalacOptions

[info] * -encoding

[info] * utf8

[info] * -unchecked

[success] Total time: 0 s, completed Jan 2, 2017 11:44:51 PM

Next, take these two keys (from Keys):

val scalacOptions = taskKey[Seq[String]]("Options for the Scala compiler.")

val checksums = settingKey[Seq[String]]("The list of checksums to generate and to verify for dependencies.")

Note: scalacOptions and checksums have nothing to do with each other.

They are just two keys with the same value type, where one is a task.

It is possible to compile a build.sbt that aliases scalacOptions to

checksums, but not the other way. For example, this is allowed:

// The scalacOptions task may be defined in terms of the checksums setting

scalacOptions := checksums.value

There is no way to go the other direction. That is, a setting key can’t depend on a task key. That’s because a setting key is only computed once on project load, so the task would not be re-run every time, and tasks expect to re-run every time.

// Bad example: The checksums setting cannot be defined in terms of the scalacOptions task!

checksums := scalacOptions.value

Defining a setting that depends on other settings

In terms of the execution timing, we can think of the settings as a special tasks that evaluate during loading time.

Consider defining the project organization to be the same as the project name.

// name our organization after our project (both are SettingKey[String])

organization := name.value

Here’s a realistic example.

This rewires Compile / scalaSource key to a different directory

only when scalaBinaryVersion is "2.11".

Compile / scalaSource := {

val old = (Compile / scalaSource).value

scalaBinaryVersion.value match {

case "2.11" => baseDirectory.value / "src-2.11" / "main" / "scala"

case _ => old

}

}

What’s the point of the build.sbt DSL?

We use the build.sbt domain-specific language (DSL) to construct a DAG of settings and tasks.

The setting expressions encode settings, tasks and the dependencies among them.

This structure is common to Make (1976), Ant (2000), and Rake (2003).

Intro to Make

The basic Makefile syntax looks like the following:

target: dependencies

[tab] system command1

[tab] system command2

Given a target (the default target is named all),

- Make checks if the target’s dependencies have been built, and builds any of the dependencies that hasn’t been built yet.

- Make runs the system commands in order.

Let’s take a look at a Makefile:

CC=g++

CFLAGS=-Wall

all: hello

hello: main.o hello.o

$(CC) main.o hello.o -o hello

%.o: %.cpp

$(CC) $(CFLAGS) -c $< -o $@

Running make, it will by default pick the target named all.

The target lists hello as its dependency, which hasn’t been built yet, so Make will build hello.

Next, Make checks if the hello target’s dependencies have been built yet.

hello lists two targets: main.o and hello.o.

Once those targets are created using the last pattern matching rule,

only then the system command is executed to link main.o and hello.o to hello.

If you’re just running make, you can focus on what you want as the target,

and the exact timing and commands necessary to build the intermediate products are figured out by Make.

We can think of this as dependency-oriented programming, or flow-based programming.